Diving Deeper into Kubernetes Workloads: StatefulSets, DaemonSets, and Jobs

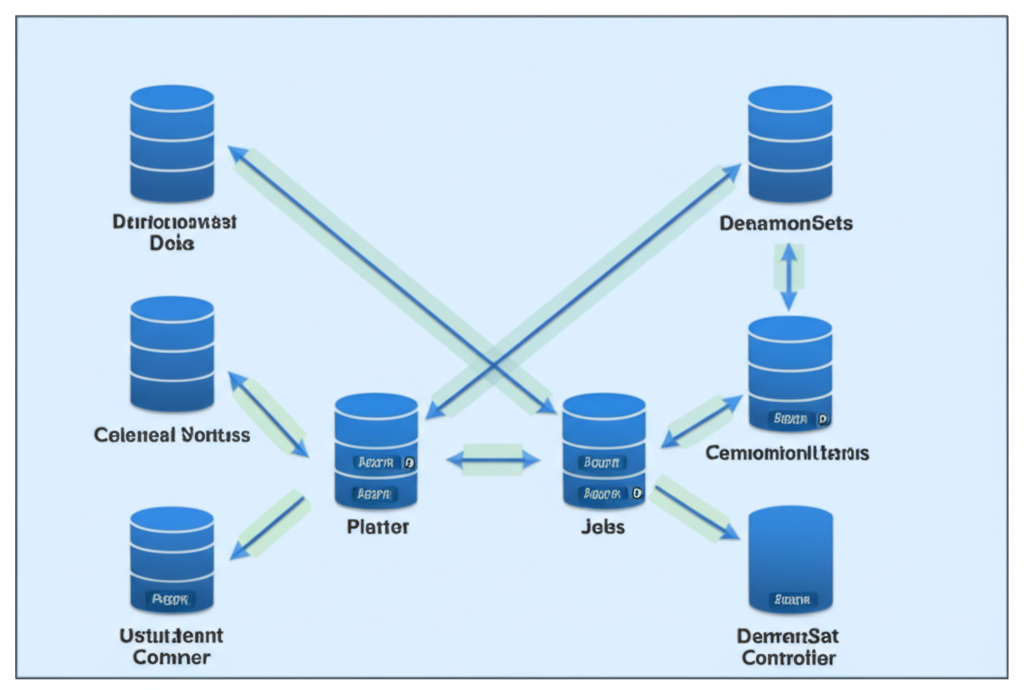

In our Kubernetes journey, we’ve already explored fundamental concepts like Pods and Deployments. Now, let’s expand our understanding by diving into three more specialized workload controllers: StatefulSets, DaemonSets, and Jobs. These controllers are essential for managing different types of applications and tasks within your cluster. We’ll break down each one in simple terms and highlight their key use cases.

1. StatefulSets: For Order and Persistent Identity

Imagine you’re running a database like PostgreSQL or a distributed data store like ZooKeeper. These applications need stable network identities, persistent storage, and ordered, graceful scaling. This is where StatefulSets come into play.

Key Characteristics of StatefulSets:

- Stable, Unique Network Identities: Each Pod in a StatefulSet gets a unique, persistent hostname (e.g., `my-app-0`, `my-app-1`). This identity persists even if the Pod is rescheduled.

- Persistent Storage: StatefulSets are designed to work with Persistent Volumes. Each Pod can be associated with its own dedicated persistent storage that remains even if the Pod is replaced.

- Ordered, Graceful Deployment and Scaling: Pods in a StatefulSet are created and scaled up sequentially (e.g., `0`, then `1`, then `2`). Similarly, they are terminated in reverse order (`2`, then `1`, then `0`). This is crucial for maintaining consistency in stateful applications.

- Stable Ordinal Index: Each Pod has a stable ordinal index that identifies its position within the set. This index is maintained across rescheduling.
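
To make the naming and ordering rules concrete, here is a small Python sketch. It is a toy model for illustration, not how the StatefulSet controller is implemented. The StatefulSet name `my-app` comes from the example above; the headless Service name `my-app-headless`, the volume claim template name `data`, and the `default` namespace are assumptions, and the DNS suffix assumes the default cluster domain `cluster.local`.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PodIdentity:
    ordinal: int
    pod_name: str
    dns_name: str
    pvc_name: str


def statefulset_identities(name, replicas, service, claim_template,
                           namespace="default", cluster_domain="cluster.local"):
    """Derive the stable identity of each Pod in a StatefulSet.

    All argument values used below are illustrative assumptions.
    """
    identities = []
    for ordinal in range(replicas):
        # Pod names follow the pattern <statefulset-name>-<ordinal>.
        pod_name = f"{name}-{ordinal}"
        identities.append(PodIdentity(
            ordinal=ordinal,
            pod_name=pod_name,
            # Per-Pod DNS name, served through the governing headless Service.
            dns_name=f"{pod_name}.{service}.{namespace}.svc.{cluster_domain}",
            # Claims follow the pattern <claim-template-name>-<pod-name>.
            pvc_name=f"{claim_template}-{pod_name}",
        ))
    return identities


def scale_up_order(identities):
    """Pods are created from the lowest ordinal to the highest."""
    return [pod.pod_name for pod in identities]


def scale_down_order(identities):
    """Pods are terminated from the highest ordinal to the lowest."""
    return [pod.pod_name for pod in reversed(identities)]


pods = statefulset_identities("my-app", 3, "my-app-headless", "data")
for pod in pods:
    print(pod.pod_name, pod.dns_name, pod.pvc_name)
print("create:   ", scale_up_order(pods))
print("terminate:", scale_down_order(pods))
```

Because the names are derived only from the StatefulSet name and the ordinal, a replacement for `my-app-1` ends up with the same hostname and the same claim (`data-my-app-1`) as the Pod it replaces.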

When to Use StatefulSets:

- Databases: Managing databases like MySQL, PostgreSQL, Cassandra.

- Distributed Systems: Deploying distributed data stores like ZooKeeper, etcd, Kafka.

- Applications Requiring Stable Network IDs and Persistent Storage: Any application where consistent identity and data persistence for individual instances are critical.

Think of it like this: Imagine a row of numbered lockers. Each locker (Pod) has a unique number (hostname) and its own dedicated space inside (persistent volume). If a locker needs to be replaced, the new locker will have the same number and access to the same internal space.

2. DaemonSets: Ensuring a Pod on Every (or Some) Node

Sometimes, you need to run a specific agent or utility on every node in your Kubernetes cluster, or on a selected subset of nodes. This is where DaemonSets shine.

Key Characteristics of DaemonSets:

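- One Pod Per Node (by default): A DaemonSet ensures that one copy of a Pod runs on each eligible node in your cluster. Nodes that are excluded by a node selector, or that carry taints the Pod does not tolerate, do not get a copy.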

- Node Selectors: You can use node selectors to target specific nodes where the DaemonSet Pods should run.

- Automatic Pod Management: When a new node is added to the cluster, the DaemonSet controller automatically deploys a Pod on that node. When a node is removed, the corresponding Pod is also removed.
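
The behaviour described above can be pictured as a simple reconcile loop. The Python sketch below is a deliberately simplified model, not the real controller: it only considers node selectors (it ignores taints, tolerations, and node affinity), and the node names and the `disktype` label are assumptions made up for the example.

```python
def matches(node_labels, node_selector):
    """A node is eligible when it carries every key/value pair in the selector."""
    return all(node_labels.get(key) == value
               for key, value in node_selector.items())


def desired_nodes(nodes, node_selector=None):
    """Return the names of the nodes that should each run one DaemonSet Pod."""
    selector = node_selector or {}
    return {name for name, labels in nodes.items() if matches(labels, selector)}


def reconcile(running_on, nodes, node_selector=None):
    """One pass of a toy control loop: compare current state with desired state.

    Returns (nodes that need a Pod created, nodes whose Pod should be removed).
    """
    wanted = desired_nodes(nodes, node_selector)
    to_create = sorted(wanted - running_on)
    to_delete = sorted(running_on - wanted)
    return to_create, to_delete


# Hypothetical cluster: node names and labels are illustrative only.
nodes = {
    "node-a": {"disktype": "ssd"},
    "node-b": {"disktype": "hdd"},
}
running = set()

# Initial rollout: every node gets a Pod.
create, delete = reconcile(running, nodes)
running = (running | set(create)) - set(delete)
print("initial:", create, delete)

# A new node joins the cluster: only that node needs a new Pod.
nodes["node-c"] = {"disktype": "ssd"}
create, delete = reconcile(running, nodes)
running = (running | set(create)) - set(delete)
print("node added:", create, delete)

# A node leaves the cluster: its Pod goes away with it.
del nodes["node-a"]
create, delete = reconcile(running, nodes)
running = (running | set(create)) - set(delete)
print("node removed:", create, delete)

# With a node selector, only matching nodes are targeted.
print("ssd only:", sorted(desired_nodes(nodes, {"disktype": "ssd"})))
```

The point of the sketch is the shape of the logic: the controller keeps comparing "which nodes should have a Pod" with "which nodes have one" and closes the gap in either direction.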

When to Use DaemonSets:

- Log Collection: Running agents like Fluentd or Fluent Bit to gather logs from all nodes and ship them to a backend such as Elasticsearch.

- Monitoring Agents: Deploying node-level monitoring tools like the Prometheus Node Exporter.

- Network Utilities: Running network agents or proxies on each node.

Think of it like this: Imagine a cleaning crew where one cleaner (Pod) is assigned to each building (Node) in a complex. The manager (DaemonSet controller) makes sure every building has its assigned cleaner. If a new building is added, a new cleaner is automatically assigned.

3. Jobs: Running Finite, Batch Tasks

Unlike the continuously running applications managed by Deployments and StatefulSets, Jobs are designed for finite tasks that run to completion. Once the task is finished, the Job is considered complete.

Key Characteristics of Jobs:

- Run-to-Completion: Jobs create one or more Pods and ensure that a specified number of them successfully terminate.

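- Restart Policies: You can configure how failed Pods within a Job are handled. The Pod template's restart policy must be either `Never` (a failed Pod is replaced by a new one) or `OnFailure` (the failed container is restarted in place); `Always` is not allowed for Jobs.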

- Parallel Execution: Jobs can be configured to run multiple Pods in parallel to speed up task completion.
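
To see how these settings fit together, here is a Python sketch that builds a Job manifest as a plain dictionary and prints it as JSON. The field names (`completions`, `parallelism`, `backoffLimit`, `restartPolicy`) are the ones used by the `batch/v1` Job API; the helper `build_job`, the Job name `report-batch`, the `busybox:1.36` image, and the command are hypothetical placeholders chosen for the example.

```python
import json

# Jobs only accept these two restart policies in their Pod template.
ALLOWED_RESTART_POLICIES = {"Never", "OnFailure"}


def build_job(name, image, command, completions=1, parallelism=1,
              restart_policy="Never", backoff_limit=6):
    """Build a batch/v1 Job manifest as a plain dict (illustrative helper)."""
    if restart_policy not in ALLOWED_RESTART_POLICIES:
        raise ValueError(
            f"restartPolicy must be one of {sorted(ALLOWED_RESTART_POLICIES)}, "
            f"got {restart_policy!r}"
        )
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": {
            # How many Pods must finish successfully for the Job to complete.
            "completions": completions,
            # How many Pods may run at the same time.
            "parallelism": parallelism,
            # How many retries are allowed before the Job is marked failed.
            "backoffLimit": backoff_limit,
            "template": {
                "spec": {
                    "restartPolicy": restart_policy,
                    "containers": [
                        {"name": name, "image": image, "command": command},
                    ],
                },
            },
        },
    }


# Six successful completions, at most three Pods running in parallel.
job = build_job(
    "report-batch",
    "busybox:1.36",
    ["sh", "-c", "echo processing one work item"],
    completions=6,
    parallelism=3,
    restart_policy="OnFailure",
)
print(json.dumps(job, indent=2))
```

Since kubectl accepts JSON as well as YAML, saving the printed output to a file and running `kubectl apply -f` on it should be enough to try this against a test cluster.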

When to Use Jobs:

- Batch Processing: Running one-off scripts or data processing tasks.

- Database Migrations: Executing database schema updates.

- Backups: Performing on-demand backups, or scheduled ones when the Job is created on a timetable by a CronJob.

Think of it like this: Imagine assigning a set of tasks to a team of workers (Pods). Once all the assigned tasks are completed successfully, the job is done, and the team can be dismissed.

Conclusion

StatefulSets, DaemonSets, and Jobs are powerful tools in the Kubernetes ecosystem, each designed to handle specific types of workloads. By understanding their unique characteristics and use cases, you can effectively manage a wider range of applications and tasks within your cluster. As you continue your Kubernetes journey, experimenting with these controllers will be key to building robust and scalable solutions.